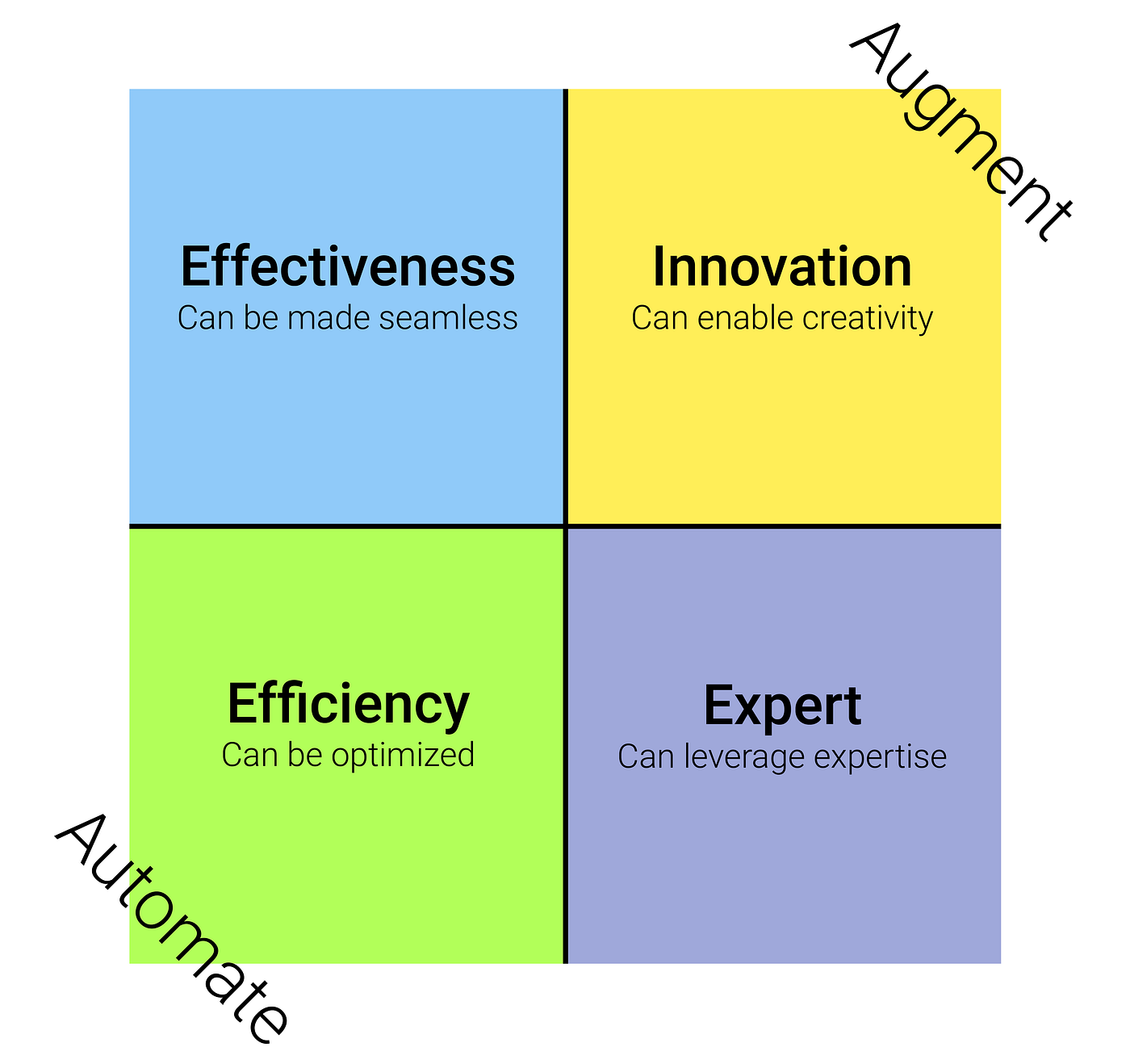

AI governance frameworks could help organizations learn, govern, monitor, and mature AI adoption. This paper explores the potential risks of AI and provides a standardized, practical categorization of these risks: Data Related Risks, AI/ML Attacks, Testing and Trust, and Compliance. AIRS believes there are significant potential benefits of AI and that its adoption within financial services presents opportunities to improve both business and societal outcomes when risks are managed responsibly.

The views expressed in this paper are those of the individual contributors and do not constitute the views of any of the firms with which the contributors are associated or by which they are employed. Readers are encouraged to consider the information provided in this paper for reference and discussion purposes. Readers should assess, implement, and tailor their firms’ AI/ML programs and respective controls as appropriate for their business model, product and service mix, and applicable legal and regulatory requirements. This paper is intended for discussion purposes only and is not intended to serve as a prescriptive roadmap for implementing AI/ML tools or as a comprehensive inventory of risks associated with the use of AI. This white paper provides AIRS’s views on potential approaches to AI governance for financial services, including potential risks, risk categorization, interpretability, discrimination, and risk mitigation, in particular as applied to the financial industry.

As financial services firms evaluate the potential applications of artificial intelligence (AI), for example to enhance the customer experience and garner operational efficiencies, Artificial Intelligence/Machine Learning (AI/ML) Risk and Security (“AIRS”) is committed to furthering this dialogue. AIRS has drafted the following overview, which discusses AI implementation and the corresponding potential risks firms may wish to consider in formulating their AI strategy.
